The AI/LLM Data Flaw That's Killing Marketers

We can only measure a very small percentage of how users engage with our brands.

This week’s newsletter is sponsored by: Rankability.com

Maybe you’ve heard of this little thing called a large language model (LLM). They power a lot of the AI tools we’re all using right now, like ChatGPT, Claude, and Gemini.

And they’re a pretty massive shift from classic search. Search engines give you a list of options so you can “choose your own adventure.” LLMs give you the answer, usually with citations to back it up.

Sounds great for users. Sure. But for marketers, it’s a migraine in progress.

Because measuring LLM usage is almost impossible right now. If someone gets the answer inside the LLM and never clicks through to your site, you and I don’t get credit, data, or even proof it happened.

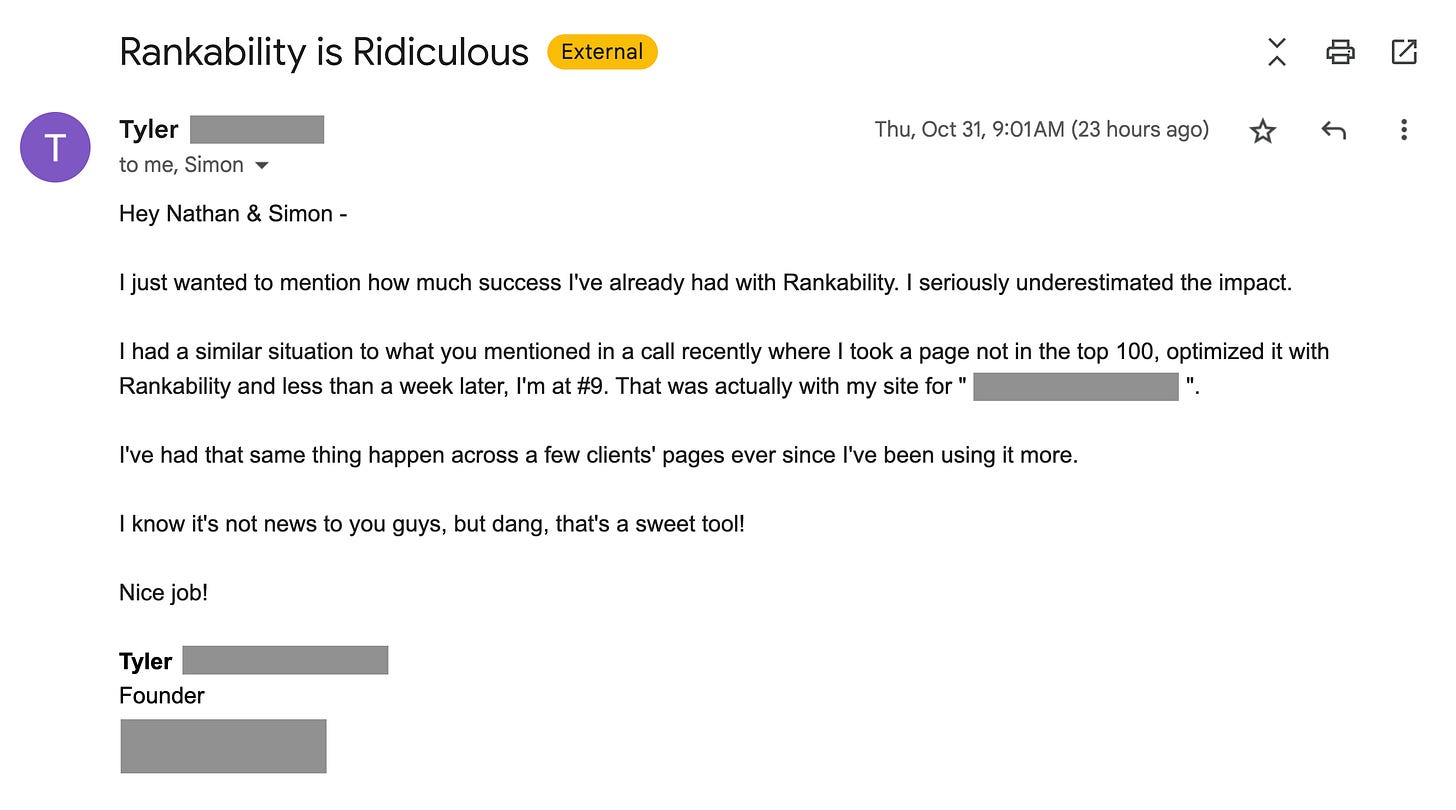


So Watcha Doin’ on Them GPTs There?

We’re pulling out the Minnesotan accent here. But in all seriousness, the SEO world is deep in “how do we get visibility in LLMs” mode. Some of it is smart. Some of it is… desperate. And some of it is straight-up grifters selling “AI performance” promises they cannot possibly measure.

So what can we measure today?

Not much, and the accuracy is still a question mark.

Clicks from LLMs that show up as referral traffic (a big IF, since it depends on whether the platform passes a referrer)

Citations and mention tracking (useful, but volatile and inconsistent)

That’s it. Two thin signals. Everything else is happening off-site, inside the platform.
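To check the first signal in your own data, you can bucket LLM referral clicks with a rough Python sketch like the one below. It assumes you can export one referrer URL per session from your analytics tool. The hostname list is an assumption based on the platforms' current domains, and it is neither official nor complete. Platforms change domains, and some don't pass a referrer at all.

```python
from urllib.parse import urlparse

# ASSUMPTION: a non-exhaustive list of LLM referrer hostnames.
# Platforms change domains (or strip referrers), so verify against your own data.
LLM_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return the LLM platform name for a referrer URL, or None if it isn't one."""
    if not referrer_url:
        # No referrer: the session lands in direct/(none) and can't be attributed.
        return None
    host = (urlparse(referrer_url).hostname or "").lower()
    return LLM_REFERRER_HOSTS.get(host)


def llm_referral_share(referrers):
    """Fraction of sessions whose referrer maps to a known LLM platform.

    `referrers` is a list with one referrer URL per session
    (a hypothetical export format chosen for illustration).
    """
    if not referrers:
        return 0.0
    llm_sessions = sum(1 for ref in referrers if classify_referrer(ref) is not None)
    return llm_sessions / len(referrers)
```

Any session with an empty referrer falls into direct/(none) and never counts here. That is exactly how LLM influence goes missing from the report.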

The Data We’re Missing (aka the real problem)

Here’s the flaw: LLMs turned huge parts of the customer journey into private conversations. And private conversations do not show up in your analytics stack.

What’s missing compared to search?

Impressions and reach: You don’t know how often your brand or content was surfaced as an answer.

On-platform engagement: Did they read it? Save it? Copy it? Share it? Ask three follow-up questions? No clue.

The path to the decision: You can’t see the chain of prompts that led to your brand being recommended.

On-platform conversions: If the user shortlists options or decides without clicking, your funnel never even starts.

Audience context: Device, geo, new vs returning behavior, cohorts, frequency, recency. Ha, good luck!

This is why LLM reporting feels like trying to measure Netflix using only DVD sales. LLMs are truly their own ecosystem, one we can’t measure (right now).

Search Engine KPIs vs LLM KPIs

Raise your hand if you consider yourself a performance marketer. Hell yeah. I’ve always hung my hat on measurable outcomes, and I’ve rolled my eyes plenty of times when people tried to sell “soft metrics” as the future of SEO.

Sigh. It’s changing.

Search engines:

User searches, sees results, clicks, engages, converts (or not). Attribution has always been messy, but we still had a trackable journey and directional ROI.

LLMs:

User prompts, gets an answer instantly, and if they are satisfied, the journey ends. No click. No cookie. No remarketing. No analytics trail.

We’re moving from trackable journeys to private conversations. If your entire measurement strategy depends on sessions, LLMs will make your reporting look worse, even as your influence grows.

Why This Wrecks Marketing Decisions

You know the old question: if a tree falls in the forest and nobody’s around, does it make a sound?

Marketers are living the modern version of that every day. If attribution doesn’t show a session from a specific channel, can we even prove the tactic delivered ROI?

And it gets worse:

Attribution lies by omission: GA4 only captures the minority of users who click through.

Content ROI gets undercounted: your content can influence decisions without driving sessions.

Brand demand gets misread: leadership sees flat traffic and assumes “no impact,” while influence is happening off-site.

I’m fortunate to work with some large brands, and when I reviewed their analytics, LLM referral clicks consistently accounted for less than 2% of total traffic.

The funny part? SEOJobs.com actually had the highest LLM referral share of all the accounts I reviewed. The rest were split across e-commerce and SaaS, and still barely registered.

Citations and Impressions ≠ Sessions

Last year, I sat on a panel at SMX Advanced and got asked about the value of LLM citations. My response shocked a few people:

“Until my electric company starts accepting impressions as a form of payment, they are completely worthless in my book.”

If you’re a fellow performance marketer, that probably didn’t surprise you, but I’ve adjusted my thinking since then. Not because impressions suddenly pay bills, but because we’re now obligated to quantify influence when clicks disappear. (I call this the halo effect.)

The shift is uncomfortable: less “perfect attribution,” more directional evidence, correlation, and narrative-building around total brand performance.

And unfortunately, I think this gets worse before it gets better.

What to do right now (practical playbook)

Quick and dirty. Here’s the playbook.

A) Treat LLMs like dark social

Assume influence without clicks.

Expect underreporting.

Don’t confuse “low referrals” with “no impact.”

B) Set expectations with leadership

Add this disclaimer to reporting:

“LLM referrals reflect only users who clicked through; on-platform engagement is not measurable in GA4.”

C) Use proxy signals (directional)

Pick a few and track them consistently:

Brand search lift (branded impressions in GSC and Bing Webmaster Tools)

Direct traffic, returning users, and homepage performance

Assisted conversions and longer paths

Growth in “unknown/none” first touch

Periodic prompt tests to see which pages get cited
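Brand search lift is the easiest of these to put a number on. Below is a minimal Python sketch. It assumes a query-level export of (query, impressions) pairs from GSC or Bing Webmaster Tools, covering two equal-length periods. The `acme` brand pattern is a hypothetical placeholder for your own brand terms.

```python
import re

# HYPOTHETICAL brand pattern: replace with your brand terms and common misspellings.
BRAND_PATTERN = re.compile(r"\bacme\b", re.IGNORECASE)


def branded_impressions(rows, pattern=BRAND_PATTERN):
    """Sum impressions for queries matching the brand pattern.

    `rows` is an iterable of (query, impressions) pairs (export format assumed).
    """
    return sum(impressions for query, impressions in rows if pattern.search(query))


def brand_search_lift(before_rows, after_rows, pattern=BRAND_PATTERN):
    """Percent change in branded impressions between two comparable periods."""
    before = branded_impressions(before_rows, pattern)
    after = branded_impressions(after_rows, pattern)
    if before == 0:
        return None  # no baseline, so lift is undefined
    return (after - before) * 100 / before
```

Treat the output as directional, not as attribution. Seasonality, PR, and paid campaigns move branded impressions too.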

D) Optimize to be the cited source

Put the answer up top. Make entities and authorship obvious. Keep pages fresh and easy to quote.
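To see whether those changes get you cited more often, you can roll the periodic prompt tests from the proxy list into a citation rate per page. Answers are volatile, so a better approach than a single yes/no check is to run the same prompt several times and track the share of runs that cite each URL. The sketch below is in Python and assumes you've already collected the cited URLs from each run. The input format and the `example.com` domain are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def is_on_domain(url, domain):
    """True if the URL's host is `domain` or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def citation_rates(runs, domain):
    """Per-URL citation rate across repeated prompt tests.

    `runs` is a list of prompt-test results, each a list of URLs cited in one
    answer (hypothetical format). Returns {url: fraction of runs citing it}
    for URLs on `domain`.
    """
    if not runs:
        return {}
    counts = Counter()
    for cited in runs:
        # Count each URL at most once per run so rates stay between 0 and 1.
        for url in set(cited):
            if is_on_domain(url, domain):
                counts[url] += 1
    return {url: n / len(runs) for url, n in counts.items()}
```

Track the same prompt set on a fixed schedule so that changes in the rates reflect your content, not a different sample of questions.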

So, let me ask you: are you seeing LLM influence without the clicks to prove it?
