My Free ContentKing Alternative to SEO Alerts Using Screaming Frog

Get the alerts you need, skip the Conductor sales pitch. This free Screaming Frog setup does the job.

Thanks To This Week’s #SEOForLunch Sponsor: Semrush Enterprise

When ContentKing first hit the scene, I was all in. Steven and Vincent built something SEOs had been dreaming about: real-time monitoring with customizable alerts for site changes. Think Swifties scoring Eras Tour tickets level of excitement!

I became a power user overnight. Every client of mine got the same pitch:

“If this tool catches just one rogue noindex tag or a surprise robots.txt update, it’ll pay for itself 100 times over.”

Sure, other tools tried to compete. But in my opinion? Nothing touched what the CK team had built.

Then, like most good things in tech… it got acquired.

This Week’s #SEOForLunch Sponsor is Semrush Enterprise.

AI Mode's 51% Overlap Means Big Opportunities

Google's AI Mode is shaking up the game again. New Semrush research shows 92% of responses cite ~7 domains, with only 51% overlapping traditional top-10 Google results.

Translation? Your brand’s AI visibility operates on completely different rules than organic SEO. But that 49% represents untapped opportunity where smart brands can leapfrog competitors.

Also noteworthy: the intent response patterns, where commercial and transactional queries generated longer responses featuring more sources. There’s extra real estate here.

Winners are already optimizing for both traditional and AI search simultaneously—like with Semrush Enterprise and AI Optimization.

Nobody Likes Change

In 2022, ContentKing was acquired by Conductor. If you know me, you know I’ve never held back my opinions about Conductor. But let’s be 100% clear: I’ve got nothing but respect for what Steven, Vincent, and the team pulled off.

They built something exceptional, got real industry adoption (no small feat), and exited on their terms. That’s the dream. I even told them as much when they personally reached out with the news.

No beef there. Hard work should be rewarded.

Sticking my head in the sand

You know those moments where you don’t love what happened, but you bite your tongue, feign ignorance, and try to keep the peace? That was me. I told myself, “Hey, maybe this turns out fine.”

Shortly after the acquisition was announced, our sponsorship agreement ended. Conductor (understandably) wanted the freedom to promote its full platform in #SEOForLunch. I wasn’t willing to advertise anything beyond ContentKing.

No hard feelings. I saw the writing on the wall.

So I kept paying for my CK subscription, using the tool to monitor SEOJobs.com for external application links that were erroring out.

And I kept pretending not to see the invoice from Conductor quietly hitting my inbox each month.

But then I got this email…

Changes are coming

Nearly three years after the acquisition and barely any public-facing updates to the CK platform, I finally got the email I’d been dreading.

It confirmed what we all suspected.

I was on vacation when it landed in my inbox, and frankly, I wasn’t going to burn energy worrying about it. Still, I sent back a quick reply to confirm what I already knew: my “preferred partner” deal was toast.

It’s been a few weeks now. No response.

Am I surprised?

Not even a little.

An Alternative Solution

I’ve never been one to shy away from sharing my opinions with the industry. Moments after I sent that email, I published this Tweet/Xweet, whatever the cool kids call it these days.

The response was immediate.

People flooded the thread with suggestions. A handful of tools were close. Some had similar functionality. But every one of them came packed with bloated features I didn’t need and a price tag two to five times higher than what I had been paying.

Then someone suggested it:

“Why not just use scheduled Screaming Frog crawls?”

That’s when the lightbulb went off.

Email Alerts Utilizing Screaming Frog

Screaming Frog and Sitebulb are two SEO tools I’ll happily pay for every year. Call them "SEO taxes" if you want, but for anyone serious about SEO, they’re worth every penny.

One of Screaming Frog’s more underrated features is its ability to schedule crawls at whatever interval you want. That’s where my lightbulb moment hit.

Sure, it’s not real-time like ContentKing, but I don’t need real-time. For SEOJobs.com, I just needed a daily crawl (scheduled overnight when traffic is low) and an alert if any outbound application links were broken on any of the current job postings.

Of course, Screaming Frog doesn’t natively send alerts for specific flags (I suspect it’s coming!). But I figured out a workaround to get email notifications only when a change actually warrants action.

Here’s how to replicate my setup:

Setting Up A Scheduled Crawl

I’m not going to reinvent the wheel here. Screaming Frog already has great documentation for setting up scheduled crawls.

That said, there are a few important caveats to make sure the rest of this alert system works. In my setup, I have SEOJobs.com crawl daily at 4 am.

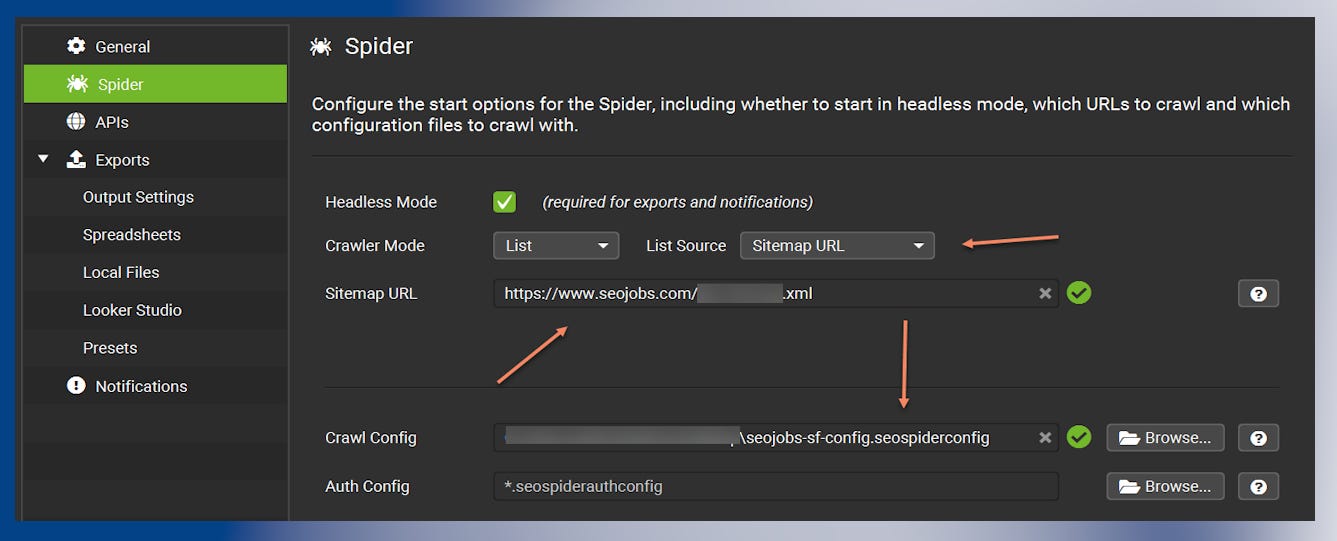

Step 1: Configure your Spider

This part will vary based on what you want to monitor.

For my use case, monitoring external job application links, I built a stripped-down crawl config that pulls in all job listings via the dynamic XML sitemap.

Then, instead of crawling the full site for every detail, I have it only store external link data via the Bulk Export feature.

Make sure to save this config and assign it to your scheduled crawl.

Step 2: Set Up File Export/Storage

You’ll want to use Bulk Export > Response Codes to capture the following reports:

External Redirection (3xx) Inlinks

External Client Error (4xx) Inlinks

External Server Error (5xx) Inlinks

My advice? Keep it simple. Export only what you need to monitor.

You’ll also want to configure the export destination. My Python script (coming next) supports both local and Google Drive storage. I personally run this on a dedicated local machine, and that’s what I’ve QA’d most thoroughly.

Important: The exported reports should be consolidated into one spreadsheet. The Python script expects one file to analyze.
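If your exports land as separate CSV files, one way to consolidate them is a few lines of Python. This is only a sketch, not part of the starter kit: the `consolidate_exports` name, the `*_inlinks.csv` file pattern, and the added `Report` column are my assumptions, and it writes a CSV rather than an Excel workbook, so check which format your copy of the script expects.

```python
import csv
from pathlib import Path


def consolidate_exports(export_dir, output_path, pattern="*_inlinks.csv"):
    """Merge every matching CSV export into one file.

    Each row gets a "Report" column holding the name of the file it came from.
    Returns the number of data rows written.
    """
    rows = []
    fieldnames = ["Report"]
    for path in sorted(Path(export_dir).glob(pattern)):
        # utf-8-sig drops a byte-order mark if the export starts with one
        with path.open(newline="", encoding="utf-8-sig") as handle:
            reader = csv.DictReader(handle)
            for name in reader.fieldnames or []:
                if name not in fieldnames:
                    fieldnames.append(name)
            for row in reader:
                row["Report"] = path.stem
                rows.append(row)

    with Path(output_path).open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(
            handle, fieldnames=fieldnames, restval="", extrasaction="ignore"
        )
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Give the output file a name that does not match the pattern (for example `consolidated.csv`), otherwise the next run would pull the previous consolidated file back in.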

Step 3: Custom Email Alerts When Action Required

The feature I loved most about ContentKing? Custom email alerts.

I could configure notifications based on specific crawl-triggered events. For SEOJobs.com, that meant a heads-up anytime an “Apply Online” button led to a broken external link. Since these links point to third-party job application forms, a dead URL means a terrible user experience and lost opportunity for both the job seeker and employer. I want those listings removed ASAP.

ContentKing handled this beautifully.

Screaming Frog, by contrast, only alerts you when a crawl completes, not when something breaks. That’s not enough for me. I wanted:

An alert only when specific conditions are met (e.g. broken external application links)

The actual issue included in the email body (because I’m lazy and don’t want to open the file)

So, I built a lightweight solution in Python.

It reviews each daily crawl export, scans for erroring external links, and sends me an email with the exact details, only when action is needed.
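To make the idea concrete, here is a minimal sketch of that "scan, then alert only if needed" flow. It is not the code from the starter kit. It assumes a CSV export with `Source`, `Destination`, and `Status Code` columns, and the function names are hypothetical.

```python
import csv
from email.message import EmailMessage


def find_broken_links(csv_path, min_status=400):
    """Return (source, destination, status) for every row at or above min_status."""
    broken = []
    with open(csv_path, newline="", encoding="utf-8-sig") as handle:
        for row in csv.DictReader(handle):
            try:
                status = int(row.get("Status Code") or 0)
            except ValueError:
                continue  # skip rows whose status is not a plain integer
            if status >= min_status:
                broken.append((row["Source"], row["Destination"], status))
    return broken


def build_alert(broken, sender, recipient):
    """Build the alert email, or return None when there is nothing to report."""
    if not broken:
        return None
    msg = EmailMessage()
    msg["Subject"] = f"{len(broken)} broken external link(s) found"
    msg["From"] = sender
    msg["To"] = recipient
    lines = [
        f"{status}  {destination}  (linked from {source})"
        for source, destination, status in broken
    ]
    msg.set_content("\n".join(lines))
    return msg
```

Returning `None` when the list is empty is what keeps the inbox quiet: the caller only sends when there is a message to send. Pass `min_status=300` if you also want redirects flagged.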

Step 4: Running the Starter_Kit

Inside the starter_kit you downloaded above, there’s a README.md file that walks you through setup step-by-step.

That said, here are a few key things worth calling out:

4.1: Install Python + Dependencies

If you're new to Python (like I was), don’t stress. The README includes every command you’ll need.

You’ll:

Install Python

Run a few commands to install dependencies (e.g., pandas; smtplib, which handles the email, already ships with Python)

Easy enough.

4.2: Config file

Everything highlighted in yellow should be fairly straightforward. The two other highlights may cause some confusion.

EMAIL_PASSWORD is not your regular account password if you are using Gmail. You will need to generate an app password (learn more).

You can create your app password by logging into your Google account here.

The final highlighted field is the location where you have Screaming Frog export your consolidated spreadsheets. This will either be local (my example) or your Google Drive file path.
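For the curious, this is roughly what the app password ends up being used for. The sketch below is my own illustration, not the starter kit's `email_sender.py`: `load_password` and `send_alert` are hypothetical names, and reading `EMAIL_PASSWORD` from an environment variable instead of the config file is an assumption on my part. The Gmail host and port (`smtp.gmail.com`, 587 with STARTTLS) are Google's standard SMTP settings.

```python
import os
import smtplib
from email.message import EmailMessage


def load_password(env=os.environ):
    """Read the app password from EMAIL_PASSWORD (assumed to be an env variable here)."""
    password = env.get("EMAIL_PASSWORD", "")
    if not password:
        raise RuntimeError("EMAIL_PASSWORD is not set")
    # Google usually shows app passwords in space-separated groups; drop the spaces
    return password.replace(" ", "")


def send_alert(msg, user, password, host="smtp.gmail.com", port=587,
               smtp_factory=smtplib.SMTP):
    """Send an EmailMessage over SMTP with STARTTLS."""
    with smtp_factory(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(msg)
```

The `smtp_factory` parameter is only there so you can swap in a fake while testing and avoid sending real mail.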

4.3: Test and schedule Automatic Runs

Before automating anything, you’ll want to test it.

Run a Screaming Frog crawl manually.

Make sure the export generates the same reports you selected during scheduled crawl setup.

Save the exported file in your configured export path.

Then, run the following command in terminal:

`python main.py once`

If everything’s set up correctly, you’ll get an email with results only if issues are found.

4.4: Automated Scheduling

Yes, this part is Windows-specific because that’s what I use. #SorryNotSorry

Here’s how to automate the whole process using Windows Task Scheduler:

Note: You will want to configure this to run AFTER the scheduled Screaming Frog crawl. I gave myself a ~20 min buffer, but if your crawl is bigger, you might want to schedule this a few hours out.

You can use Windows Task Scheduler to run the monitor automatically at a specific time (e.g., daily at 4:30 AM).

#### Step-by-Step Instructions

1. **Open Task Scheduler**

- Press `Win + S` and type “Task Scheduler”, then open it.

2. **Create a New Task**

- In the right pane, click **Create Basic Task…**

- Name it something like: `External Link Monitor`

3. **Set the Trigger**

- Choose **Daily**

- Set the start time to **4:30:00 AM** (or your preferred time)

4. **Set the Action**

- Choose **Start a program**

- **Program/script:**

`C:\external-link-monitor\run_monitor.bat`

- (If your folder is different, adjust the path accordingly.)

5. **Finish and Save**

- Click **Finish** to save the task.

6. **(Optional) Test the Task**

- In Task Scheduler, right-click your new task and select **Run** to make sure it works.

Your computer must be on (and not asleep) for this to run. Keep that in mind if you rely on overnight processing.
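Because the monitor runs on a fixed clock time rather than waiting for the crawl to finish, a late or failed crawl means the script could re-read yesterday's export. One optional guard is to check the export's modified time before analyzing it. This is a sketch under my own assumptions (the `export_is_fresh` helper and the two-hour window are not part of the starter kit):

```python
import time
from pathlib import Path


def export_is_fresh(export_path, max_age_hours=2.0, now=None):
    """Return True if the export exists and was modified within max_age_hours."""
    path = Path(export_path)
    if not path.is_file():
        return False
    now = time.time() if now is None else now
    age_seconds = now - path.stat().st_mtime
    return age_seconds <= max_age_hours * 3600
```

If this returns `False`, the safer move is to skip the run (or send yourself a "crawl did not finish" note) rather than alert on stale data.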

Step 5: Customize Logic Code (optional)

The Python starter kit I shared is designed specifically to flag external link errors.

But that’s just one use case.

Want to track title tag changes, H1 edits, canonical shifts, or meta description updates? No problem, but it requires a few quick edits across the codebase.

Let’s walk through how you can do this.

A. Update the Parsing Logic

The current code in link_monitor.py is designed to look for external links and their status codes.

To monitor something else (like title tag changes), you’ll need to:

Change which columns are read from the Excel file.

Change the logic that determines what counts as a “new error” or “change.”

Example: Monitoring Title Tag Changes

Suppose your Screaming Frog export has columns:

Address (the URL)

Title 1 (the title tag)

You would:

Parse these columns instead of Source, Destination, and Status Code.

Store the title tag for each URL in your history file.

Alert if the title tag for a URL changes compared to the last run.

B. Update the History File Logic

The history file (external_links_history.json) currently stores link status.

You’ll want to store the relevant data for your new use case (e.g., title tags by URL).
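As a rough illustration of what a history file for title tags could look like, here is a sketch that stores a plain `{url: title}` mapping as JSON. The helper names and the mapping shape are my assumptions; the real `external_links_history.json` in the starter kit may be structured differently.

```python
import json
import os
from pathlib import Path


def load_history(path):
    """Return the saved {url: title} mapping, or an empty dict on the first run."""
    path = Path(path)
    if not path.exists():
        return {}
    with path.open(encoding="utf-8") as handle:
        return json.load(handle)


def save_history(path, history):
    """Write the mapping to a temp file first, then swap it into place."""
    path = Path(path)
    tmp = path.with_suffix(path.suffix + ".tmp")
    with tmp.open("w", encoding="utf-8") as handle:
        json.dump(history, handle, indent=2, ensure_ascii=False)
    os.replace(tmp, path)
```

Writing to a temp file and renaming it means a crash mid-write will not leave you with a half-written history file.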

C. Update the Email Alert

Change the email template in config.py to reflect the new type of alert (e.g., “Title Tag Changed”).

D. What Files to Edit

link_monitor.py: Main logic for parsing and detecting changes.

config.py: Update the email template and possibly the subject.

email_sender.py: Only if you want to change how the email is formatted or sent.

E. Example: Detecting Title Tag Changes

In link_monitor.py, you would:

Change the parsing function to read Address and Title 1.

Store {url: title} in the history.

Compare the current title to the previous one for each URL.

If different, add to the alert list.
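Those four steps could be sketched like this. It assumes a CSV export with `Address` and `Title 1` columns; the function names are hypothetical and this is not the actual contents of link_monitor.py.

```python
import csv


def read_titles(csv_path):
    """Map each URL (Address) to its title tag (Title 1) from a crawl export."""
    with open(csv_path, newline="", encoding="utf-8-sig") as handle:
        return {row["Address"]: row["Title 1"] for row in csv.DictReader(handle)}


def detect_title_changes(previous, current):
    """Compare two {url: title} mappings and list URLs whose title changed."""
    changes = []
    for url, title in current.items():
        old = previous.get(url)
        # URLs with no previous entry are new pages, not changes
        if old is not None and old != title:
            changes.append({"url": url, "old": old, "new": title})
    return changes
```

Note the design choice: brand-new URLs are ignored rather than flagged, so the first run (with an empty history) produces no alerts. If you want new or removed pages reported too, that would be a separate comparison of the two sets of URLs.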

If you're unsure how to change the logic or syntax, just prompt ChatGPT or Claude like:

“Update this Python script to compare 'Title 1' column instead of link status, and alert me when it changes compared to the previous run.”

You already have the working system. Now it’s just a matter of plugging in new logic for what you care about.

Get Creative, AI Makes Anything Possible

Before you start thinking, “Wow, Nick is a smart cookie,” let me stop you.

This whole system? I built it with the help of AI. I wrote rough logic, asked questions, hit bugs, debugged them, rewrote functions, and kept iterating until it did exactly what I wanted. I didn’t need a fancy degree or hire an expensive developer.

I just needed curiosity and a willingness to tinker.

This is exactly what I mean when I say AI won’t replace SEOs (but lazy ones are toast).

The people who thrive in this new era are the ones who use AI as a powerful tool, not a shortcut. And guess what? Your boss loves it when you “do more with less,” so you might as well flex this muscle.

So long, ContentKing.

Conductor, it’s been… well, meh.

You couldn’t even make it easy to cancel. Having to contact support to stop paying? Chef’s kiss. 🙄